How does the culture of science change and improve? Many people have identified shortcomings in core social processes of science, such as peer review, how grants are awarded, how people are selected to become scientists, and so on. Yet despite often compelling criticisms, strong barriers inhibit widespread change in such social processes. The result is near stasis, and apathy about the prospects for improvement. People sometimes start new research institutions intended to do things differently; unfortunately, such institutions are often changed more by the existing ecosystem than they change it. In this essay we sketch a vision of how the social processes of science may be rapidly improved. In this vision, metascience plays a key role: it deepens our understanding of which social processes best support discovery; that understanding can then help drive change. We introduce the notion of a metascience entrepreneur, a person seeking to achieve a scalable improvement in the social processes of science. We argue that: (1) metascience is an imaginative design practice, exploring an enormous design space for social processes; (2) that exploration aims to find new social processes which unlock latent potential for discovery; (3) decentralized change must be possible, so outsiders with superior ideas can't be blocked by established power centers; (4) ideally, change would align with what is best for science and for humanity, not merely what is fashionable, politically popular, or media-friendly; (5) the net result would be a far more structurally diverse set of environments for doing science; and (6) this would enable crucial types of work difficult or impossible within existing environments. For this vision to succeed, metascience must develop and intertwine three elements: an imaginative design practice, an entrepreneurial discipline, and a research field.
Overall, it is a vision in which metascience is an engine of improvement for the social processes and ultimately the culture of science.

Imagine you're a science fiction author writing a story depicting a scientific discovery made by an alien species. In your story you show the alien scientists up close – how they work, how they live. Would you show them working within institutions resembling human universities, with the PhD system, grant agencies, academic journals, and so on? Would they use social processes like peer review and citation? Would the alien scientists have interminable arguments, as human scientists do, about the merits of the h-index and impact factor and similar attempts to measure science?

Almost certainly, the design of our human scientific social processes has been too contingent on the accidents of history for the answers to those questions to all be "yes". It seems unlikely that humanity has found the best possible means of allocating scarce scientific resources! We doubt even the most fervent proponents of, say, Harvard or the US National Science Foundation would regard them as a platonic ideal. Nor does it seem likely the h-index and similar measures are universal measures of scientific merit.

This doesn't mean the aliens wouldn't have many scientific facts and methodological ideas in common with humanity – plausibly, for instance, the use of mathematics to describe the universe, or the central role of experiment in improving our understanding. But it also seems likely such aliens will have radically different social processes to support science. What would those social processes be? Could they have developed scientific institutions as superior to ours as modern universities are to the learned medieval monasteries?

The question "how would aliens do science?" is fun to consider, if fanciful. But it's also a good stimulus for immediately human-relevant questions. For instance: suppose you were given a large sum of money – say, a hundred million dollars, or a billion dollars, or even ten or a hundred billion or a trillion dollars – and asked to start a new scientific institution, perhaps a research institute or funder. What would you do with the money?

Would you aim to incrementally improve on the approaches currently taken by Harvard, the NSF, HHMI, and so on? Or would you attempt to create radically new institutions that transcend existing ones, embodying a new organizational approach to doing science? Not just in the sense of new scientific ideas or methods in specific fields, but rather new social processes for science; that is, new ways to select and support human beings to make discoveries? Our distant ancestors did not, after all, anticipate the immense improvements possible in humanity's discovery ecosystem1. Perhaps, with sufficient insight, further transformative improvements are possible?

These questions aren't just hypothetical. Lots of concrete work has been done on the question: "what's wrong with the social processes of science, and how can we improve them?" Some of this work is in papers, essays, and manifestos explaining how to fix or improve peer review or funding or hiring or the career structure of science, and so on. Early entries in the genre include such celebrated works as Francis Bacon's The New Atlantis and Novum Organum, and Vannevar Bush's Science – The Endless Frontier. And, of course, there is much modern work: from fields including the economics of science funding, the science of science, science and technology studies, science policy, and others; in the mass media; and on social media and in informal conversation amongst scientists.

Alongside these proposals, many adventurous people are building new and sometimes daringly different scientific organizations. There are big new research institutes, such as the Arc Institute, DeepMind, and Altos. There are tiny pirate insurgencies, such as the Center for Open Science, DynamicLand, EleutherAI, and dozens or perhaps even hundreds more2. There are new funders, such as Convergent Research, Fast Grants, the FTX Foundation, VitaDAO, and many more. Of course, many of these are differentiated in part by their specific research focus: for example, DeepMind was one of the first large organizations focused on artificial intelligence research. But many also have theses based in part on new or unusual approaches to the basic social processes of science. And when we talk to the founders of such organizations they often express hope that not only will their organization succeed, it will be a beacon, succeeding so spectacularly that the underlying social ideas will spread widely, improving humanity's discovery ecosystem as a whole.

How realistic is that hope? Does our discovery ecosystem improve in response to successful experiments with new social processes? Or is it resistant to change, only improving slowly? In a nutshell, this essay explores the question: how well does the discovery ecosystem learn, and can we improve the way it learns? As we address this question, many related questions naturally arise: does the discovery ecosystem enable the rapid trial of a multitude of wildly imaginative social processes? Or are only tiny, incremental changes ever possible? Can outsiders with great ideas displace existing approaches? Or can change only come from people and organizations who already have enormous power?

What we'll find is a discovery ecosystem in a state of near stasis, with strong barriers inhibiting the improvement of key social processes. We believe it's possible to change this situation. In this essay we sketch a vision in which metascience drives rapid improvement in the social processes of science. This vision requires a strong theoretical discipline of metascience, able to obtain results decisive enough to drive the adoption of new social processes, including processes that may displace incumbents. It also requires a strong ecosystem of metascience entrepreneurs, people working to achieve scalable change in the social processes of science. In some sense, the essay explores what it would mean for humanity to do metascience seriously. And it's about placing that endeavor at the core of science. We believe the net result will be a portfolio of social processes far more structurally diverse than today, enabling crucial types of work difficult or impossible within existing environments, and so expanding the range of possible discoveries.

As far as we are aware, these questions have not previously been explored in depth. To make the questions more concrete, let's sketch a few specific examples of unusual social processes that could be (or are being) trialled today by adventurous funders or research organizations3. These sketches are intended as brief illustrative examples, to evoke what we mean by "changed social processes". Though the examples are modest and conservative – indeed, some ideas may be familiar to you4, though perhaps not all – versions scaled out across science would significantly change the culture of science. It's a long list, to emphasize the many diverse opportunities for imaginative change. Later in the essay we develop deeper ways of thinking that generate many more ideas for change.

Fund-by-variance: Instead of funding grants that get the highest average score from reviewers, a funder should use the variance (or kurtosis or some similar measurement of disagreement5) in reviewer scores as a primary signal: only fund things that are highly polarizing (some people love it, some people hate it). One thesis to support such a program is that you may prefer to fund projects with a modest chance of outlier success over projects with a high chance of modest success. An alternative thesis is that you should aspire to fund things only you would fund, and so should look for signal to that end: projects everyone agrees are good will certainly get funded elsewhere. And if you merely fund what everyone else is funding, then you have little marginal impact6,7.
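To make the contrast between the two selection rules concrete, here is a minimal Python sketch. The proposal IDs and reviewer scores are hypothetical, and we use population variance as the disagreement measure; the text leaves the exact measure open, so treat this as one illustrative choice rather than a specification of the program.

```python
from __future__ import annotations

from statistics import mean, pvariance

# Hypothetical reviewer scores (1-10 scale) for three illustrative proposals.
example_scores: dict[str, list[float]] = {
    "A": [8, 8, 8, 8],    # everyone mildly likes it
    "B": [2, 10, 2, 10],  # polarizing: some love it, some hate it
    "C": [6, 7, 6, 7],    # lukewarm consensus
}


def fund_by_mean(scores: dict[str, list[float]], slots: int) -> list[str]:
    """Conventional rule: fund the proposals with the highest average score."""
    return sorted(scores, key=lambda pid: mean(scores[pid]), reverse=True)[:slots]


def fund_by_variance(scores: dict[str, list[float]], slots: int) -> list[str]:
    """Fund-by-variance rule: fund the proposals reviewers disagree about most.

    Disagreement is measured as the population variance of the scores;
    another dispersion measure could be substituted here.
    """
    return sorted(scores, key=lambda pid: pvariance(scores[pid]), reverse=True)[:slots]


print(fund_by_mean(example_scores, 1))      # ['A']
print(fund_by_variance(example_scores, 1))  # ['B']
```

One caution if kurtosis is substituted: a clean two-camp split like proposal B has low rather than high kurtosis, so the direction of the ranking would need to be chosen with care.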

Century Grant Program8: Solicit grant applications for projects to be funded for 100 years. Funded through an endowment model, such a program would cost only a small multiple of conventional 5- or 10-year funding. The point is to elicit an important type of intellectual dark matter9: problems of immense scientific value that can't be investigated on short timelines. Inspired by seminal projects such as the CO₂ monitoring at the Mauna Loa Observatory, the Framingham Heart Study, and the Cape Grim Air Archive.
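The "small multiple" claim can be checked with the standard present-value-of-an-annuity formula. The sketch below assumes a hypothetical 5% real (after-inflation) return on the endowment and compares the up-front cost of paying the same annual budget for 100 years against 5 and 10 years, discounting all three the same way. The rate is our assumption, not a figure from the text.

```python
def upfront_cost(annual_budget: float, years: int, real_return: float) -> float:
    """Sum needed today to pay `annual_budget` at the end of each of `years` years,
    if the unspent balance earns `real_return` per year (present value of an annuity)."""
    if real_return == 0:
        return annual_budget * years
    return annual_budget * (1 - (1 + real_return) ** -years) / real_return


RATE = 0.05  # hypothetical real annual return on the endowment

century = upfront_cost(1.0, 100, RATE)
for horizon in (5, 10):
    ratio = century / upfront_cost(1.0, horizon, RATE)
    print(f"100-year grant costs {ratio:.1f}x a {horizon}-year grant")
```

At the assumed rate this gives roughly 4.6x the cost of a 5-year grant and 2.6x the cost of a 10-year grant, because distant years contribute little to the present value. Lower assumed returns push the multiple up.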

Tenure insurance: Tenure-track scientists often play it safe in the projects they take on. Encourage people to swing for the fences by offering a large payout if they fail to receive tenure. Supposing 80% of tenure-track faculty receive tenure10, the cost for a large payout would only be a modest addition to an existing benefits package. A premium of $8k per year for 6 years, with a 5x multiplier and reasonable assumptions about interest rates, would result in a payout of over $300k. That's several years of pre-tenure salary in many fields and at many institutions. This suggestion is an instance of two more general patterns: (1) moving risk to parties who can more easily bear it, making the system as a whole less risk averse; and (2) a plausible way to increase people's ambition is to de-risk by improving their fallback options in the event of failure11.
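The payout arithmetic can be sketched as follows. We read the 5x multiplier as what a fair pool would pay if 80% of members receive tenure (1 / 0.2 = 5, ignoring administrative costs), and we assume premiums are paid at the start of each pre-tenure year and compound until the tenure decision. Both readings, and the return rates tried, are our assumptions.

```python
def tenure_insurance_payout(premium: float, years: int, multiplier: float, annual_return: float) -> float:
    """Payout on failing to get tenure, for premiums paid at the start of each pre-tenure year.

    Each premium compounds at `annual_return` until the tenure decision at the end of
    year `years`; the accumulated premiums are then scaled by `multiplier`.
    """
    accumulated = sum(premium * (1 + annual_return) ** (years - k) for k in range(years))
    return multiplier * accumulated


# Illustrative return assumptions; the text does not state a specific rate.
for rate in (0.0, 0.05, 0.07):
    print(f"{rate:.0%} return: ${tenure_insurance_payout(8_000, 6, 5, rate):,.0f}")
```

Under these assumptions the payout is $240k with no investment return, about $286k at 5%, and about $306k at 7%, so the "over $300k" figure corresponds to returns somewhat above 6% per year.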

Failure audit: Many funders advocate high-risk, high-reward research, but this is often mere research theater, not seriously meant. For instance, in 2018 the European Research Council issued a self-congratulatory report claiming that: (a) they fund mostly "high risk" work; and (b) almost all the work they fund succeeds, with 79% of projects achieving either a "scientific breakthrough" or a "major scientific advance". If almost every project succeeds, then this is not a definition of "high risk" we recognize12. To prove the seriousness of their intent about risk, funders could run credible independent audits on their grant programs, and if the failure rate for grants persists below some threshold (say, 50%), the program manager is fired13. Or, at a different level of abstraction, the entire funder could be audited, and if fewer than 50% of its grant programs fail, the funder's director is fired.
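A minimal sketch of the audit rule, assuming "persists" means the failure rate stayed below the threshold in each of the last few audit periods. The three-period window and the function name are hypothetical choices for illustration; only the 50% threshold and the 79% success figure come from the text.

```python
from __future__ import annotations


def audit_triggers(failure_rates: list[float], threshold: float = 0.5, periods: int = 3) -> bool:
    """Return True if the audited failure rate stayed below `threshold`
    in each of the most recent `periods` audits (our reading of "persists")."""
    recent = failure_rates[-periods:]
    return len(recent) == periods and all(rate < threshold for rate in recent)


erc_style_failure_rate = 1 - 0.79  # 79% of projects reported as successes
print(audit_triggers([erc_style_failure_rate] * 3))  # True: far too few failures for "high risk"
print(audit_triggers([0.21, 0.62, 0.48]))            # False: one period met the threshold
```

The same function applies at the funder level if each entry is instead the fraction of a funder's grant programs that failed in that period.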

Acquisition pipeline for research institutes: People often lament the loss of (or large changes in) great private research institutes past – PARC in the 1970s is perhaps the best modern example14. If PARC-in-the-1970s was so great, why didn't the NSF acquire it? An acquisition would have been within its mission, and almost certainly a far-better-than-median use of NSF's funds15. There might well have been political or bureaucratic barriers, but if so the problem lies in politics and bureaucracy, not in the merit of the idea. If public (or philanthropic) acquisition of private research institutes were common, it might incentivize the creation of more outstanding private research institutes.

Pull immigration programs: Moving between countries is an intimidating and arduous exercise, often involving a considerable amount of know-how. There is no a priori reason some enterprising country – let's say Estonia, which has run several innovative experiments in the way it approaches immigration – couldn't simply identify outstanding people they'd like as immigrants, and directly recruit them. Imagine a valet shows up on the doorstep of every science olympiad student in the world, with a first-class plane ticket to Estonia, a pre-approved visa, an offer of several years of first-rate private mentoring in whatever field or fields they desire, a stipend, and housing as part of a community of similarly extraordinary students. It'd be interesting to see what the long-run outcomes from such a community would be16.

Open Source Institute: Like a research university, but instead of producing understanding in the form of research papers, it would produce understanding in the form of open source software and open protocols (with a penumbra of concomitant goods, such as prototypes, demos, open data and, yes, papers). Based on the thesis that sometimes important new understanding isn't best expressed in words, but rather in code or protocols. A number of superficially similar programs already operate, but none (as far as we know) genuinely change the underlying political economy – the means by which people build their reputation and career – which is the primary point.

Institute for Traveling Scientists: A yacht that sails around the world, taking on and dropping off scientists at each port. It would be a mobile version of places like the Stanford Center for Advanced Study in the Behavioral Sciences – somewhere stimulating and relaxing for scientists to go on sabbatical, learn a new field, or perhaps write that book they've been meaning to write. Inspired in part by Craig Venter's round-the-world trip for the Ocean Sequencing Project.

Long-shot prizes: Purchase insurance against extremely unlikely possibilities that would transform the world. A proof that P ≠ NP. A proof that P = NP. A constructive algorithm solving NP-complete problems quickly. Cold fusion. True faster-than-light travel. A perpetual motion machine. And so on. The more unlikely the outcome, the larger the prize can be, even for a small premium17. Cheap, unlikely to succeed, and extraordinarily impactful if it helped bring about solutions to such problems18.
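The relation between premium, probability, and prize is simple to state: an actuarially fair policy charges a premium equal to the probability of the event times the payout, so the payout a fixed premium can buy grows as the event becomes less likely. The sketch below adds a hypothetical `loading` factor for the insurer's costs and margin; the probabilities and premium are illustrative, and whether insurers would actually write such policies is outside what this sketch shows.

```python
def affordable_prize(premium: float, probability: float, loading: float = 1.0) -> float:
    """Largest prize an insurer could back for a given premium.

    `probability` is the (assumed) chance the insured breakthrough happens during the
    policy term; `loading` >= 1 is a hypothetical markup for the insurer's costs and
    margin, with 1.0 meaning an actuarially fair policy (premium == probability * prize).
    """
    if not 0 < probability <= 1:
        raise ValueError("probability must be in (0, 1]")
    if loading < 1:
        raise ValueError("loading must be at least 1")
    return premium / (probability * loading)


# Illustrative numbers only: a $100k premium against events of decreasing likelihood.
for p in (1e-3, 1e-5, 1e-7):
    print(f"p = {p:g}: prize up to ${affordable_prize(100_000, p, loading=2.0):,.0f}")
```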

Public hall of shame / anti-portfolio: The venture capital firm Bessemer Venture Partners maintains a public anti-portfolio of companies where they had an opportunity to invest, but failed. It includes enormously successful companies such as Apple, Google, Facebook, Tesla, and many others. Each failure is accompanied by a short story naming the Bessemer partner who had the opportunity to invest but didn't, and describing (often fancifully and amusingly) why they failed to invest. Every science funder, every university, and every scientific journal in the world should maintain a similar anti-portfolio. Alternately, a collective anti-portfolio could be constructed by a third party willing to tolerate some opprobrium. It wouldn't be popular19. But done well it would be invaluable.

Interdisciplinary Institute: Most proposals for interdisciplinarity are tepid. Take interdisciplinarity seriously, by setting up an institute which identifies (say) 30 different disciplines, and then hires three people to work at the intersection of every possible pairing of disciplines. That's just 1305 people – a large program, but tiny on the scale of modern science. This would be a deliberately variance-inducing strategy. Most pairings of disciplines would produce little, but there are likely a few where great discoveries would unexpectedly be made through the combination. Those few would pay for all the rest. A less expensive approach would be to sample from randomly chosen pairs of disciplines, with the disciplines potentially coming from a much longer list.
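The headcount follows from counting unordered pairs: 30 disciplines give C(30, 2) = 435 pairings, and three people per pairing gives 1305. The sketch below checks that figure and also illustrates the cheaper sampled variant. The 200-discipline list and the sample size of 50 pairs are hypothetical.

```python
from __future__ import annotations

import math
import random
from itertools import combinations


def staff_for_all_pairs(num_disciplines: int, people_per_pair: int = 3) -> int:
    """Headcount if every unordered pair of disciplines gets `people_per_pair` researchers."""
    return math.comb(num_disciplines, 2) * people_per_pair


def sample_pairs(disciplines: list[str], num_pairs: int, seed: int | None = None) -> list[tuple[str, str]]:
    """Cheaper variant: pick `num_pairs` distinct discipline pairs uniformly at random."""
    rng = random.Random(seed)
    return rng.sample(list(combinations(disciplines, 2)), num_pairs)


print(staff_for_all_pairs(30))  # 1305, i.e. 435 pairs x 3 people

# Hypothetical longer list of 200 disciplines, sampling 50 pairs.
longer_list = [f"discipline-{i}" for i in range(200)]
chosen = sample_pairs(longer_list, 50, seed=0)
print(staff_for_all_pairs(200))  # 59700 people to cover every pair
print(len(chosen) * 3, "people for the sampled pairs")
```

The quadratic growth in the number of pairs is what makes full coverage expensive for long lists, and why sampling becomes the practical option there.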

At-the-Bench Fellowship: In their heyday, senior scientists at places such as Bell Labs and Cambridge's Laboratory of Molecular Biology often carried out research work themselves, or in direct, hands-on collaboration with 1-3 others20. Yet modern universities often strongly encourage scientists to take on a managerial role, applying for grants but being hands-off in research work. This Fellowship would fund senior scientists to spend essentially all their time actually doing science. The thesis here is that for some types of work, important discoveries are most likely if done by someone highly skilled, with a deeply developed affinity for some part of nature. Put another way, the thesis is that for some scientists there are increasing returns to focused expertise, not the diminishing returns assumed in the conventional scientist-becomes-manager-of-a-large-group model.

Printing press for funders: An entity endowed with $10 billion could spin out new funders each year; it could spin out, for instance, a single funder with a roughly $500 million endowment, perhaps running a competition to find the operators of the new funder. Or it could spin out a larger number of smaller funders. If each new funder had a radically different thesis, this could significantly increase the structural diversity of science; and perhaps increase the diversity of ambient working environments available to scientists. It would also be possible to set up a similar kind of printing press for research organizations, mutatis mutandis. And, perhaps, to set up sunset clauses for the organizations, so they don't permanently occupy organizational space in the discovery ecosystem; organizational longevity would then necessarily be through people and ideas being passed to future organizations, not organizational inertia.
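A rough way to see whether spinning out one $500 million funder per year from a $10 billion endowment could continue indefinitely is to simulate the parent's balance. The net annual returns tried below are hypothetical assumptions; the text does not specify how the parent endowment is invested.

```python
from __future__ import annotations


def simulate_printing_press(endowment: float, spin_out: float, annual_return: float, years: int) -> list[float]:
    """Year-end balances of a parent endowment that grows at `annual_return`
    and then spins out one new funder endowed with `spin_out` each year."""
    balances: list[float] = []
    for _ in range(years):
        endowment = endowment * (1 + annual_return) - spin_out
        if endowment < 0:
            break  # the parent can no longer afford a full spin-out
        balances.append(endowment)
    return balances


for r in (0.05, 0.03):  # hypothetical net annual returns
    path = simulate_printing_press(10e9, 500e6, r, years=30)
    print(f"{r:.0%}: {len(path)} funders spun out, ${path[-1] / 1e9:.1f}B left")
```

At an assumed 5% net return the spin-out exactly matches the endowment's growth, so the balance stays at $10 billion; at lower returns the parent slowly depletes, which is one natural way to build in the sunset clause the paragraph mentions.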

Excitement quotient for funders: Scientists often apply for grants on the basis of what they believe is fundable, rather than with their best ideas21. We've spoken with scientists who tell us "I know I can get funding for many fashionable-but-unimportant projects, but I can't get funding for the work I think is most important". Why should a funder or anonymous peer reviewer know better than the scientist how they should use their skills? It has the flavor of the busybody stranger who tells a parent they're parenting wrong. Many funders effectively give veto power to such strangers. One approach to partially address this is for an independent agency to sample people applying to different funders, asking: "how excited were you about this grant application?" They can then publicly rate different funders by comparing excitement scores. This would place pressure on funders to fund work applicants were excited about, and raise questions about funders whose applications were mostly pro forma.
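The aggregation step of such a rating could be as simple as averaging self-reported excitement per funder and ranking funders by that average. The sketch below assumes a hypothetical 1-10 scale and hypothetical funder names; a real agency would also need a sampling design and some measure of uncertainty, which this sketch omits.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


def excitement_by_funder(responses: list[tuple[str, float]]) -> dict[str, float]:
    """Average self-reported excitement per funder from (funder, score) survey pairs."""
    by_funder: dict[str, list[float]] = defaultdict(list)
    for funder, score in responses:
        by_funder[funder].append(score)
    return {funder: mean(scores) for funder, scores in by_funder.items()}


def rank_funders(responses: list[tuple[str, float]]) -> list[str]:
    """Funders ordered from most to least exciting to apply to."""
    averages = excitement_by_funder(responses)
    return sorted(averages, key=averages.get, reverse=True)


# Hypothetical survey: "How excited were you about this grant application?" (1-10).
survey = [("Funder A", 4), ("Funder A", 6), ("Funder B", 8), ("Funder B", 9), ("Funder C", 2)]
print(rank_funders(survey))  # ['Funder B', 'Funder A', 'Funder C']
```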

With these examples in mind, we may restate the basic questions of the essay. Suppose, for instance, that the first of these ideas, "fund-by-variance", was given a serious trial, perhaps with multiple rounds of debugging and improvement. Suppose it was found that when implemented well it was a decisive improvement over the committee-based peer review approach used today by many funders. Would it then be adopted broadly? Or would other institutions ignore or resist it? In a healthy, dynamic discovery ecosystem it would spread widely, displacing inferior methods when appropriate. By contrast, in a static ecosystem, even if the early trials were extremely successful, other institutions would be slow to respond, or resistant to change. They would get hung up over whether the approach came from the "right" originator, or was prestigious enough. In a healthy discovery ecosystem the improved idea could come from anywhere.

In early drafts of this essay we were hesitant about writing the concrete list of ideas above. We worried that it would anchor readers on "these are the changes Nielsen and Qiu are arguing for". But the individual programs are not the point; indeed we'll suggest many more (and sometimes deeper) ideas later in this essay. Rather, the point is that a flourishing ecosystem would rapidly generate and seriously trial an enormous profusion of ideas, including many ideas far more imaginative than anything listed above. The best of those ideas would be rigorously tested, iterated on, debugged, and scaled out to improve the entire discovery ecosystem. Indeed, if truly bold ideas were being trialled, then they would include many ideas we would at first disapprove of, but sometimes the evidence for them would be so strong that we'd be forced to change our minds.

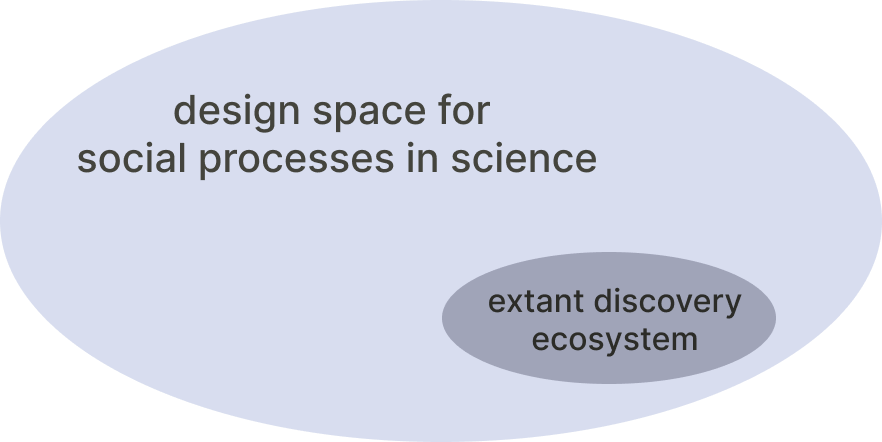

As stated above, the focus of this essay is how the discovery ecosystem improves. Part of the motivation for this focus is the belief that the design space for promising new social processes is vast:

We won't prove this belief. But we'll try to make it plausible. In Part 1 of the essay we'll discuss heuristics for exploring this metascience design space. These heuristics arise out of plausible models of how human beings make discoveries. Indeed, all the social processes of science reflect and are grounded in such models – often implicit or informal folk theories – of how discovery happens. Weak metascientific ideas result in weak social processes; stronger metascientific ideas result in stronger processes. Insofar as we can improve our metascientific theories, we can improve the way human beings make discoveries. A good way to improve those theories is to explore boldly in the design space above, understanding which ideas do and don't work, and why.

Throughout this introduction we've used many different-but-related terms, talking about changing the social processes of science; changing the culture of science; changing the institutions of science; changing the discovery ecosystem; and so on. From now on we'll use "social processes of science" as an informal catchall. By that we mean the institutional practices, incentives, norms (and so on) widely used in science. So when we talk about change to the social processes of science we are talking about changes to things like peer review, or hiring practices, or how funders approach risk, as well as broader ideas, such as the "pull immigration" or "printing press for funders" or "anti-portfolio" ideas mentioned above. The phrase "social processes of science" is unfortunately unwieldy. But it is nonetheless very helpful to have such a catchall. We will save the use of other terms for when we have a more specific need.

As noted earlier, the essay is an entry in the field of metascience. This still emerging field overlaps and draws upon many well-established fields, including the philosophy, history, and sociology of science, as well as newer fields such as the economics of science funding, the science of science, science policy, and others. While we draw on all these fields, there are notable differences in our focus. Unlike the philosophy of science, we are more concerned with social processes than methodology. The two are intertwined, so this is a difference of degree, not kind, but is nonetheless real. Of course, fields such as the sociology of science and the science of science do focus on social processes, but their focus is primarily descriptive, rather than on the imaginative design and active intervention central to our work. The exception is science policy, which has design and intervention as core goals. However, in the science policy world interventions are often focused on what is practical within existing power structures. We shall be concerned with more a priori questions of principle, and with enabling decentralized change, i.e., change that may occur outside existing power structures. For all these reasons we think of the essay as simply part of metascience.

One topic not discussed in the main body of the essay is the relationship between metascience and several external factors affecting the future of science (artificial intelligence, the rise of China and India, the colonization of space, and intelligence augmentation). We discuss these briefly in an Appendix to the essay. The focus in the body of the essay is more endogenous to science.

Underlying the essay is the assumption that improved social processes can radically transform and improve science. Most scientists we've spoken with agree with at least a weak form of this assumption. For instance, many strongly advocate metascientific principles like: the importance of freedom of inquiry for scientists; or that it strengthens science if outsiders can overturn established theories on the strength of evidence, not their credentials. For science to work well, such ideas must be expressed, even if imperfectly, in the design of the social processes of science. As said above, the quality of our social processes (and of our institutions) is determined by the quality of the metascientific ideas they embody.

But our assumption in this essay is much stronger than that weak form. Again: we believe improved social processes can radically transform and improve science. It's not so obvious this is true. Some scientists we talk with are excited by this idea, and agree that new social processes may be transformative. Others sharply disagree, telling us that broad, ecosystem-level social changes make little substantive difference to the actual science. Indeed, several notable scientists have expressed this to us using minor variations on a single phrase: "all that matters is to fund good people doing good work". Several others have said words to the effect of: "I admire your optimism, but the system never really gets any better, there's just more and more bureaucracy and 'accountability'". It's possible those people are correct. But the only way to determine that is to actively explore the question: what if there are truly transformative social processes waiting to be discovered?

We'll proceed on that assumption for now, and return at the end of the essay to reconsider whether it is true. For now, let us merely recount an anecdote from the legal scholar and computer scientist Nick Szabo22. Szabo writes of how, during the early Renaissance, exploring the oceans was an extremely risky business. Ships could run aground, or be blown badly off course by storms. Sometimes entire crews and ships and cargoes were lost. There were risks at all levels of an expedition, from the health and livelihood of individual sailors through to financiers who faced ruin if the ship ran aground or was badly damaged. But, Szabo points out, this risk profile changed considerably in the 14th century, when Genovese merchants invented maritime insurance: for the cost of a modest premium, the people financing the expedition would not suffer if the ship was damaged. This spread the risk, and made the expedition much less risky for some (though not all23) participants. That change in the funding system helped enable a new age of exploration, discovery, and prosperity.

It is easy to imagine a salty Genovese sea captain, upon being asked how to improve shipping, saying that you "just need good ships, crewed by good sailors". This would be in the vein of our scientist friends telling us "just fund good people doing good work". It contains a large grain of truth, but is not incompatible with system-level ideas radically improving the situation. The salty scientists are correct, but only within a limited outlook. Research organizations do need to be maniacal about funding good people with good projects; they can also make system-level changes which have much more profound effects. This essay is about such system-level changes.

Put another way: we believe our salty scientists are blind to just how strongly systems and social processes shape creative work. This isn't because they're unimaginative. Often, it's because the system-level change they've seen during their own careers has mostly consisted of bureaucracies making themselves happier (and often everyone else unhappier), with more red tape and demands for accountability. Naturally, such scientists are cynical about the prospects for improvement. We hope to make a convincing case that much more unconventional changes are possible, resulting in a profoundly different and better discovery system.

A variation of this line of critique is that the current social processes of science already produce many terrific outcomes. This is certainly true! It's a humbling experience to talk to the best scientists: what they can do is genuinely astounding. And you think to yourself: "this is it, these are extraordinary human beings, being used by humanity to near their full capacity". It's a wonderful achievement of humanity that we do support such people. And it's reasonable to ask why we'd need anything else. Why not just scale this up? Indeed, sometimes when talking with such people we encounter friendly or skeptical bewilderment. For them and their friends, the current system works well, and they don't see the need for anything different. But perhaps there are very different types of scientist (and scientific work) who could also achieve astounding things in science, perhaps achievements which the current system is unknowingly bottlenecked on, because their personality type doesn't thrive within that system? And perhaps they, and their approach to science, would thrive if there was more structural diversity in the social processes of science? This is a central point to which we shall return.

At first glance, this essay may appear to be an entry in that flourishing genre: what's-wrong-with-science-and-how-to-fix-it. This genre is well represented on social media, in conversation among scientists, and in articles in the scientific and mainstream media. "Here are the things wrong with peer review [or grant agencies, or universities, etc.] – and how to fix them." Indeed, the genre is not new: you can find discussions of these issues going back decades and even centuries. Each generation confronts the problems anew, and proposes solutions anew. But although there is no shortage of grand hopes and plans, progress is often slow.

Our point of view is different in a crucial way: we are not proposing a single silver bullet. We believe the opportunity is far larger. What we want is a flourishing ecosystem of people with wildly imaginative and insightful ideas for new social processes; and for those ideas to be tested and the best ideas scaled out. We will show, in part by example, that there are many different possible approaches to fixing peer review [or grant agencies, universities,…]. Instead of believing we already know the answers, and just need to implement them, it's better to develop a discovery ecosystem which can rapidly improve its own processes. The fundamental underlying questions are: How do social processes in science change? Is there a general theory of such change? Is it possible to speed up and improve that change? This subject isn't fashionable in the same way as yet another proposal for "how to fix grant agencies" or "how to fix universities". But we believe it is a fundamental issue in the way human beings make discoveries, is a central problem of metascience, and lies at the very core of science24.

As a final note, we've used informal language throughout the essay, and that may make some readers mistake it for journalism. But while it is in part a synthesis, that isn't the primary intent. Rather, it's intended as a creative research contribution: a broad vision of the purpose and potential of metascience, and how it can change science. We introduce terminology and simple models for many key elements of metascience, and sketch many core problems. Our arguments unavoidably sometimes use speculative and incomplete reasoning. We shall borrow from many existing fields, but our work isn't primarily intended as a contribution to those fields. Rather, it's intended as a sketch of part of the emerging proto-field of metascience, to help it along the way to becoming a fully fledged field25.

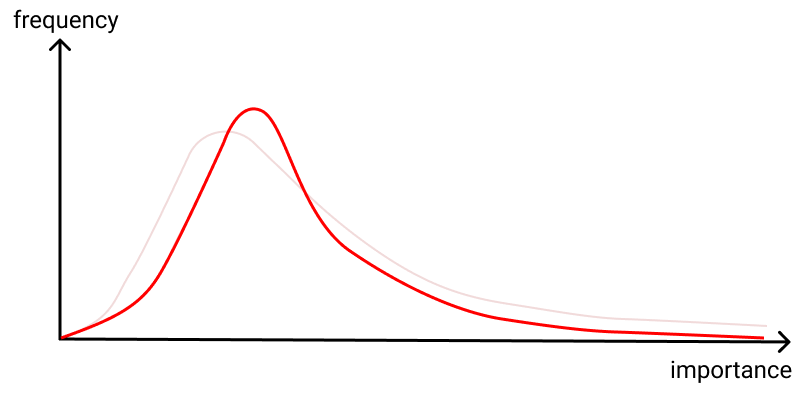

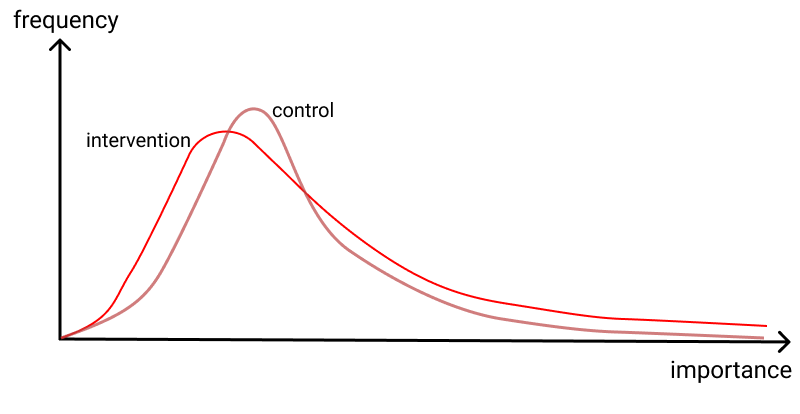

One conception of metascience is that it's about fine-tuning science, making incremental tweaks to social processes such as peer review or grant-making. But we conceive of metascience differently, believing radically different and far better social processes are possible, and that the metascience design space is vast and mostly unexplored.

Indeed, we believe the design space is so large that exploring it will require decades or centuries, at the least. Still, in Part 1 we hope to evoke something of that grand size. We'll explore the space, focusing initially on simple program ideas that could be trialled unilaterally by some imaginative funder. Although this initial focus is restricted, it can be used to illustrate generative design heuristics that help us explore imaginatively. Later we'll broaden our scope. Along the way we'll sometimes run across well-known ideas – things like the currently fashionable idea of funding lotteries, or the idea that one should fund people, not projects. But to keep the discussion fresh we'll also mention ideas that have only rarely been discussed, or which to our knowledge are novel.

Let's begin with a simple heuristic for exploring the design space. We call this the funder-as-detector-and-predictor model26, or just the detector-and-predictor model, for short. As the name implies, this is a two-part model. In one part of the model, we think of a science funder as a kind of detector or sensor27, a collective human instrument aiming to locate intellectual dark matter. That is, it aims to locate important ideas or signals present in the discovery ecosystem, but ignored by existing funders. For example, the Century Grant Program aims to elicit a class of previously invisible intellectual dark matter – ideas for projects that should last a century or more. There may be many great ideas for such projects out there; there may be few such ideas. We won't know unless we do a determined search! In this part of the model, crucial questions to ask include: what types of important signal are present in the system, but are currently ignored? Is there information which is systematically being hidden; and, if so, how might it be elicited? And what new mechanisms can we develop to locate and amplify signal28? Concretely: what does the body of scientists know that is important, and yet is currently either invisible (or not visible enough) to funders? And how can we surface that information?

In the second part of the detector-and-predictor model, funders are thought of as predictors, trying to predict future outcomes. In particular, they use some inference process to make decisions about an uncertain future, on the basis of incomplete current information. (This is the underlying problem to be solved in funding discovery.) As an example, the idea of high-variance funding is based on a simple change to the inference method used to make decisions: instead of using typical or average scores, use the variance in scores to help decide which proposals to fund. In this part of the detector-and-predictor model, crucial questions include: what information might we collect? What hedging, aggregation, and indirection strategies might be used? Where is there asymmetric opportunity? Or opportunity for unique marginal impact? What are the possible contractual designs? Where is the risk, and how can it be moved and transformed?
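To make the contrast between inference methods concrete, here is a minimal Python sketch comparing a conventional mean-score rule with one possible high-variance rule. It is illustrative only: the proposal names, the scores, the `champion_score` eligibility threshold, the funding `budget`, and the use of the population standard deviation as the measure of reviewer disagreement are all hypothetical assumptions, not a description of any actual funder's procedure.

```python
from statistics import mean, pstdev

# Hypothetical reviewer scores (1-10 scale) for four illustrative proposals.
scores = {
    "A": [7.0, 7.0, 7.0, 7.0],   # reviewers agree: solid but unremarkable
    "B": [10.0, 2.0, 9.0, 3.0],  # reviewers sharply disagree
    "C": [6.0, 6.0, 5.0, 7.0],   # mild disagreement, no strong advocate
    "D": [9.0, 8.0, 8.0, 9.0],   # reviewers agree: strong
}


def rank_by_mean(scores: dict[str, list[float]]) -> list[str]:
    """Conventional rule: rank proposals by average reviewer score, highest first."""
    return sorted(scores, key=lambda p: mean(scores[p]), reverse=True)


def rank_by_variance(scores: dict[str, list[float]], champion_score: float = 8.0) -> list[str]:
    """One possible high-variance rule: rank by reviewer disagreement
    (population standard deviation), highest first. Only proposals that at
    least one reviewer scored at or above the hypothetical `champion_score`
    threshold are eligible."""
    eligible = [p for p in scores if max(scores[p]) >= champion_score]
    return sorted(eligible, key=lambda p: pstdev(scores[p]), reverse=True)


budget = 2  # hypothetical: number of proposals the funder can support
print("fund by mean:    ", rank_by_mean(scores)[:budget])
print("fund by variance:", rank_by_variance(scores)[:budget])
```

With these made-up scores, the mean rule funds D and A, while the variance rule funds B (the proposal reviewers disagree about most) and D. The threshold is just one assumed way of keeping a variance rule from rewarding proposals that no reviewer actually champions; many other designs are possible.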

Many of the suggestions we made in the introduction may be understood through the lens of the detector-and-predictor model. We already mentioned the Century Grant Program and high-variance funding, but many others may also be viewed this way. For instance: failure audits are about observing the outcomes from the inference model, in order to determine whether it's achieving some desired end, and using incentives to change the model used. Or: the pull immigration program is about surfacing previously invisible intellectual dark matter. Many of the programs involve both parts of the model: as already mentioned, high-variance funding changes the prediction method, but it will also likely elicit different types of grant proposal, encouraging people with riskier ideas to apply. In this sense, what you detect and how you predict are interwoven. More broadly: it's stimulating to simply look through the earlier examples, and see how the model applies (or not).

The detector-and-predictor model is not intended to be universally applicable, nor to be literally correct as a descriptive model. Rather, it's a generative design heuristic, to help generate plausible, interesting program ideas. By playing with the model you can easily generate an endless supply of potential programs. To illustrate, let's describe four more programs motivated by a view of the funder as a detector searching out intellectual dark matter.

Endowed professorships by 25: Many of history's great scientists made key discoveries while very young. This was true, for example, of giants such as Newton, Darwin, and Einstein. It's also been true more recently: think of Joshua Lederberg, discovering bacterial conjugation at the age of 21, or Brian Josephson, discovering the tunneling current between superconductors at the age of 22. Concerningly, in recent times the age at which scientists can establish independent research programs has substantially increased29. Often, people who begin research in their early 20s can't establish independent programs until their late 30s or 40s(!). Instead, they work on someone else's research program, or leave science. The thesis of this endowment program is that giving some young people full independence to follow their ideas will unlock latent potential for discovery. To this end, establish endowed professorships (and associated project support) at a few outstanding institutions, say Harvard and Cambridge, for promising scientists no more than 25 years old. If even a few recapitulate the success of a Lederberg or a Josephson, such a program would be well worth it30.

Focused Research Organizations (FROs): First trialled in 2021, these are organizations of scientists and engineers which "require levels of coordinated engineering or system-building inaccessible to academia", often at a cost of tens of millions of dollars31. They aim to produce a well-defined tool, technology, or scientific dataset. Examples include: E11 bio, developing tools to make it relatively easy and inexpensive to map mouse brains, down to the level of single synapses; and the Cultivarium – most work in synthetic biology has been focused on a few model organisms, but the Cultivarium is developing tools to make synthetic biology routine in a much wider range of organisms. At first glance, FROs seem similar to endeavors such as LIGO, the LHC, and the Human Genome Project, each of which also involved large-scale science and engineering in pursuit of well-defined ends. But in the past such endeavors were conceived and funded on a bespoke basis. The innovation of FROs is that they are a scalable way of eliciting and creating such entities; the underlying thesis is that such a scalable means would reveal many valuable FROs that are currently latent. It is (again) a mechanism to search out and activate a kind of intellectual dark matter32.

Para-academic Fellowship: A Fellowship for people to do independent research work outside academia. The underlying thesis is that there are many such people who have extremely unusual combinations of skills, skills unlikely to be found in academia, but which may enable important discoveries. Think of people such as Jane Jacobs, Judith Rich Harris, and Robert Ballard. Indeed, if we go back further in time, think of the young Albert Einstein, during his time in the Swiss Patent Office. Again: this is building a detector for a class of undervalued intellectual dark matter, and then funding whatever seems most promising33.

Discipline-switching Fellowship: To make it easy for outstanding scientists to change fields. We have met many scientists who have no trouble getting funding in the field they are currently working in, but who tell us they would prefer to be working in some other field. This is strange: they have funding to work on projects they're not so keen on, but not for things they consider more promising. Sometimes they have some special insight or edge that they feel gives them an advantage in their desired new area. Others feel they have run the course of what they have to contribute in their current field. Whatever the reason, funders currently largely ignore this information: it is, yet again, intellectual dark matter. Surfacing and acting on it will help people better use their talents. Done at sufficient scale, it would help established but moribund fields die. And it would also surface useful aggregate information: if people are unexpectedly going into unglamorous fields or leaving high status fields, that's a striking signal that something is afoot. Many small-scale programs along these lines already exist; it would be interesting to make, say, 50,000 such grants each year, providing a huge injection of disciplinary liquidity into science34.

As with the program ideas suggested in the opening section, we're not claiming that any one of these program ideas would revolutionize science. Indeed, we're not even claiming any particular one would work well at all; some might work quite poorly (though we're not sure which!) A healthy discovery system should trial a profusion of ideas, including many which fail; that's what it means to be trying risky things. We do believe it's worth trialling all the ideas above, and many more. Conducting such trials would help answer an immense variety of questions, things like: how much demand is there for discipline switching? What are the resulting flows of scientists between disciplines? What determines those flows? How well do young people perform as Principal Investigators? Are there systematic differences in the directions they explore? And so on, a cornucopia of questions, partial answers, and useful data. In that sense, even "failed" programs would be successful: they will contribute crucial knowledge to our understanding of metascience. And in the event that one of the programs works strikingly well, it can be scaled up. It may even begin to change the culture of science.

There is a particular sense in which existing funders already change their "detector": they actively search for new research subfields to fund. Consider, for instance, the way the NIH systematically expands its panel areas. Or the way DARPA searches for technological whitespace. But the notion of intellectual dark matter goes much further than that35. The unifying motivating question is: what does the body of scientists know that is important, and yet is currently either invisible (or not visible enough) to funders? For example, FROs are not principally about expanding the range of fields being considered; rather, they are about a change in the structure of scientific problems that may be attacked at all. How do you know such intellectual dark matter exists? You can't, in advance. But the success of bespoke prior projects such as LIGO, the LHC, and the Human Genome Project at least suggests it's worth looking. Similarly, the Discipline-switching Fellowship isn't about expanding the range of fields considered, but rather about making use of scientists' knowledge of their own comparative advantage. And again, this is suggested by famous examples: Francis Crick from physics to molecular biology; Ed Witten from mathematics to physics. And so on. If you talk with individual scientists, and appreciate the barriers preventing such switching, you realize the intellectual dark matter exists, and a scalable Discipline-switching Fellowship is natural. Such intellectual dark matter abounds in the history and current practice of science. By searching out specific examples, it's possible to identify many more programs in the vein of those above36.

The programs just described were generated by thinking of funders as detectors. What if we focus instead on funders as predictors, trying to develop new inference procedures to make decisions? Again, there is an immense number of ways this can be done. Here are three program suggestions based on changed prediction methods:

Elicit-the-secret-thesis: Sometimes a scientist undertakes a project because they have some special secret, something they know that no-one else fully appreciates, giving them a unique competitive edge. Feynman talked about the necessity of having "to think that I have some kind of inside track… that I have some special mathematical trick that I'm going to use that [my competitors] don't have, or some kind of a talent"37. But if you're a scientist with such an edge, you're in a bind when it comes to funding. You don't want to disclose this special edge in a peer-reviewed grant: you may be telling competitors your best ideas! Thus: the standard peer review procedure sometimes suppresses the information that would be most useful for making decisions. It's an inverse market for lemons38, a form of asymmetric knowledge where perverse incentives inhibit the applicant from revealing how good their idea is. Of course, some projects have competitive edges of a less easy-to-copy nature (e.g., special equipment or personnel). But not all. A grant application could have a short separate section, where scientists are asked to describe any secret competitive edge they have. The funder would promise this secret thesis is seen only by the (professional, not peer) program manager. The secret thesis could then be used as an input in the decision-making; sometimes, it would be the decisive input.

A quota for young program managers: Suppose 50 percent of program managers were required to be appointed before age 28, and only allowed to serve for five years. Perhaps offer program manager jobs to all the Hertz or NSF Graduate Fellows at age 27? How would that change the nature of decisions made? Conventional wisdom says it requires age and experience to make good decisions. Perhaps that wisdom is correct. But the thesis here is that younger program managers would make systematically different decisions to today's incumbents, in effect a change in inference method, perhaps with some advantages relative to the current situation. It would also be interesting to try similar ideas at other levels of a funder, perhaps at the level of the overall CEO or Director. What would a 25-year-old Director do differently, compared to the 50-, 60- and even 70-somethings common now at most funders?

A "Nobel prize" for funders: The early stages of important discoveries often look strange and illegible: people grappling with fundamental ideas in ways at the margin of, or outside, conventional wisdom. Since such projects often look like anything but sure bets, there is a strong incentive for funders to delay support – just when it is most needed – in order to avoid looking foolish. This is especially true of individual program managers, who naturally shy away from funding things that may later seem silly or frivolous. It's striking to contrast this situation with venture capital, where there is a strong incentive to fund in the earliest stages, when stock is cheap because of the uncertainty; the net result is more chance of looking silly when things fail, but also a much larger windfall if things work out39. It's interesting to think about ways of rewarding the science funders – especially individuals – who are first to put their own reputations on the line to support such projects. This can be done in many ways: one natural way is to create one or more prizes to publicly recognize such brave funders.

We could explore the generative power of the detector-and-predictor model at much greater length. Each element can be riffed upon endlessly, generating many more program ideas. But that's not our purpose in this essay. Rather, the model is here as an example of a generative design heuristic which can be used to explore one (tiny!) part of the metascience design space. Let's now discuss more briefly several other such design heuristics. Each provides a different way of exploring that design space, while illustrating the broader idea of metascience as an imaginative design practice.

The detector-and-predictor model poses the question: where is there intellectual dark matter, and how to detect and amplify it? The underlying thesis is that there are many such types of dark matter, and by identifying new types we can activate untapped latent potential for discovery. Now, let's briefly discuss four other metascientific questions motivating different ways of exploring the design space. Each question needs an essay or book of its own, and could generate dozens or hundreds of program ideas, each with its own in-depth treatment40. But we believe these brief descriptions evoke what is possible, and help convey different theses about where there is latent potential for discovery.

How to 10x the rate at which new fields are created? The physicist Paul Dirac once said that the 1920s, when quantum mechanics was discovered, was a period in which it was very easy "for any second-rate physicist to do first-rate work"41. Indeed, history suggests the early days of new scientific fields are often golden ages, with fundamental questions about the world being answered quickly and easily. By contrast, later work often requires far more effort to make progress on more incremental questions. As Dirac said decades after the discovery of quantum mechanics: "There has not been such a glorious time since. It is very difficult now for a first-rate physicist to do second-rate work." We believe science is badly bottlenecked on field formation. In particular, we believe current social processes in science are designed to support work in existing fields, but strongly inhibit work critical to the creation of new fields. What programs would dramatically increase the rate of production of fruitful new fields? (And how to simultaneously avoid the creation of unfruitful Potemkin fields, animated by money rather than deep ideas?) Such programs would support ideas which are: in their very early stages42; illegible through conventional processes; outside standard disciplines; and from unusual sources. To develop such programs would mean understanding early signals that a new field is stirring, and designing to detect and support those signals. We believe many such signals are actively selected against in existing funding programs, and selecting for them would unlock tremendous latent potential for discovery.

How to greatly increase support for high risk, high reward projects? This is based on the idea, popular though not universal among scientists, that an enormous opportunity in science is to increase support for high risk, high reward research43. As we mentioned earlier, funders often talk about "high risk, high reward" research in their marketing brochures, but it's often merely words and research theater, not actual risk. To develop programs to support genuine risk means developing commitment mechanisms that tie allocation of resources to risk. We've already seen some design ideas that can help: measuring risk, bundling and unbundling and moving it around, through ideas like insurance and hedging; improving scientists' fallback options (one of many possible means of de-risking); increasing variation and disagreement; increasing pressure for a thesis identifying a unique competitive edge. These ideas arose incidentally as we developed other ideas. Pursued for their own sake, they could be developed much further, and other primitives introduced. It's notable that existing science funders seem to be bringing little urgency to the pursuit of such ideas: for instance, for more than a decade we've heard funders talk about "taking a portfolio approach". None, that we know of, have actually gone beyond largely toothless informal heuristic approaches. Indeed, they are not even systematically using the simplest of tools – things like dollar cost averaging, routine portfolio rebalancing, insurance or hedging, much less more sophisticated ideas. Institutional investors in the financial markets take risk seriously, and routinely use such ideas. Science funders could and should develop analogous ideas. Risk can potentially be designed, and the relationship to reward understood44.

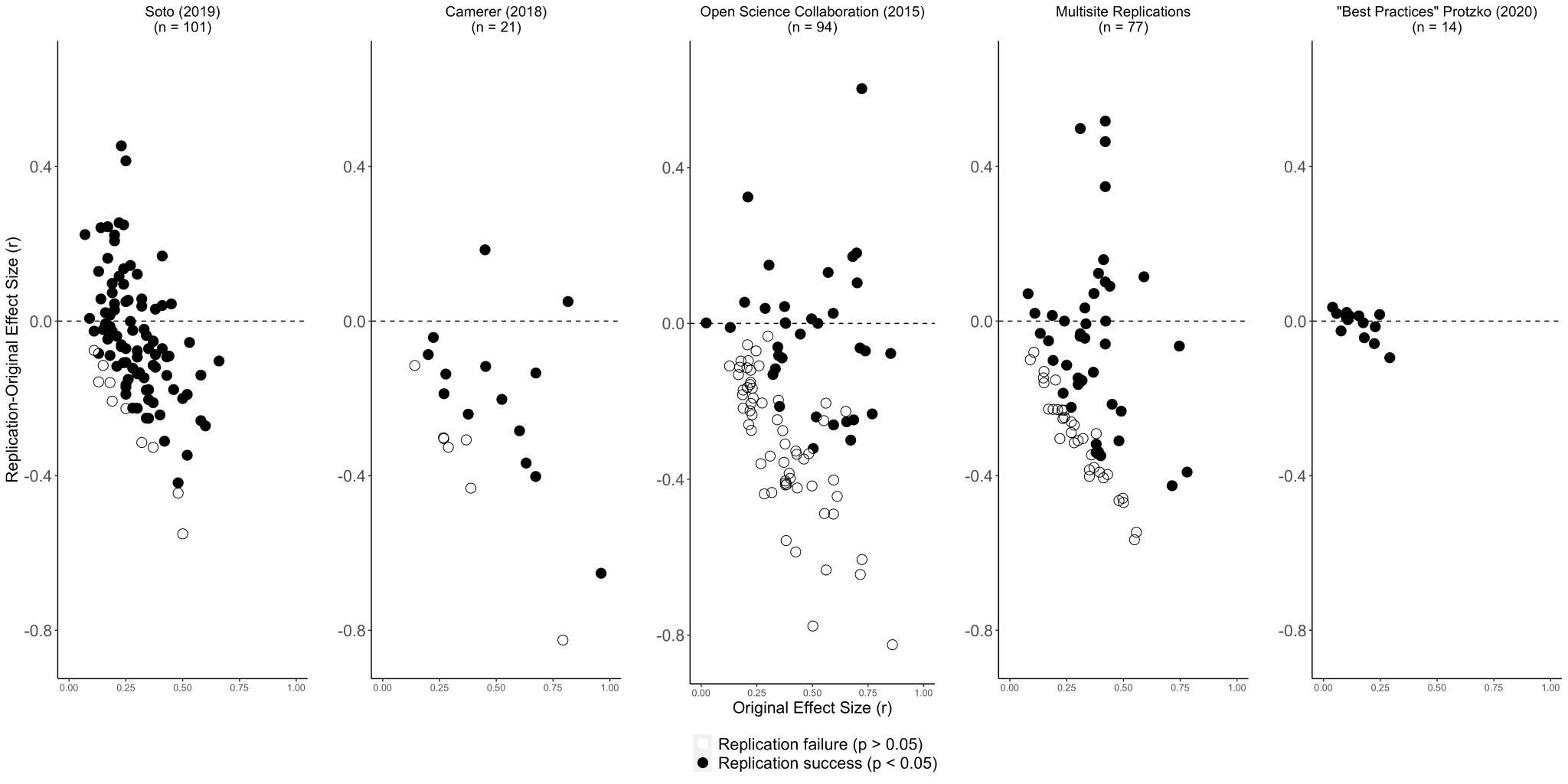

How to fix the problems with hypothesis testing? (The reproducibility movement) Hypothesis testing has been widely used in science for decades, and plays a central role in many fields. It has been known almost as long that it is susceptible to questionable research practices: p-hacking, the file drawer effect, and many others. The meta-researcher John Ioannidis has used this to argue that "most published research findings are false"45. The rough claim was that a large fraction of results in many fields amount to little more than statistical noise. In the 2010s a small cadre of people seriously developed a suite of greatly improved research practices that aimed to make those fields much more reliable. This work is ongoing, but initial results are encouraging, and we discuss this work more in Part 2 of this essay. The underlying thesis is that these changed practices enable much stronger foundations for many fields, and consequently a reliable accumulation of knowledge. That may sound dry, but in plain terms it means these fields can begin to make reliable, cumulative progress. And that's an enormous, transformative change46.

How to ensure scientists share results openly, so others may build on their work? (The modern open science movement47) In the 17th century, scientists often kept their discoveries entirely secret, or would "publish" accounts only in the form of anagrams, which could later be unscrambled to establish priority over competitors. Early metascientists such as Henry Oldenburg realized science and humanity would be far better served if scientists openly shared their discoveries. They worked hard to establish the scientific journal system, a kind of collective long-term memory for humanity, one that enabled scientists to build on each others' results. Modern computer networks radically expand the possibilities for open science, enabling scientists to build on each others' ideas, data, and code in unprecedented ways. This enables an ongoing restructuring of how scientists work, both individually and collectively.

There are many other plausible questions one might ask, other generative heuristics to explore the design space. Even more briefly: (1) In biology evolutionary innovation often follows catastrophe; this happens in markets too; would it lead to an explosion of discovery if we temporarily but drastically decreased (and then increased) funding to entities such as the NSF? We don't expect this suggestion to be popular, but that does not mean it's wrong. (2) Can we use cryptoeconomics to radically improve the political economy of science, creating far stronger alignment between individual incentives and collective social good48? (3) Can we diversify exploration and unleash creativity by decreasing the amount of grant overhead, since overhead incentivizes universities to follow grant agency fashion, and is thus a strong centralizing force49?

These questions are different to "where is there intellectual dark matter, and how to detect and amplify it?" But each expresses a different design heuristic, helping us explore different parts of the metascience design space50. As we noted earlier, the value of those heuristics lies not in their descriptive correctness or universality51, but rather in their ability to help generate good new design ideas. Each is based upon a plausible broad thesis about where there is latent potential for discovery, and sketches mechanisms to unlock that potential. The greater the potential and the better it is activated, the more transformative.

Underlying these heuristics is a view of metascience as an imaginative design practice. It's a view very different from that common in the natural sciences, which are most often about more deeply understanding existing systems, or natural variations thereof52,53. Design, by contrast, is about inventing fundamental new types of object and action, which don't obviously occur in nature. Consider Genovese maritime insurance as an example. It wasn't a change to how ships were built, or sailors trained. Rather, it introduced a radical new interlinked set of abstractions – the insurance premium, counterparties, spreading of risk, insurance payouts. These are beautiful, non-obvious ideas, none of which naturally occur in the world. Rather, they were invented through deep design imagination. And despite being "made up", they transformed humanity's relationship to the world. This is characteristic of design imagination. It must have seemed to the Genovese that financiers were "naturally" reluctant to finance expeditions, given the risks, and that this was a fixed feature of the world. And yet design showed that this was an illusion, which could be radically changed.

We mention this because discussions of metascience often give short shrift to imaginative design. We often meet people who think metascience means studying relatively minor tweaks to existing social processes, for things like peer review, hiring, granting, and so on. Those minor tweaks are genuinely valuable, and can teach us much. But we believe that imaginative design can be at the core of metascience. That means inventing fundamental new primitives for the social processes of science. It means developing tremendous design imagination and insight and new ideas to explore the metascience design space. We believe the most important and powerful social primitives in this design space are yet to be discovered.

It's challenging to illustrate this idea of imaginative design as well as we should like. That's in part because truly imaginative design is hard. The individual program ideas we've described illustrate no more than rather modest levels of imagination. A few – ideas like the failure audits or tenure insurance or the Century Grant Program – do involve moderately striking new design ideas (not all ours!). But they're not as imaginative as maritime insurance was in its time. Still, we hope you'll think of individual program ideas like these as points in a pointillist sketch to evoke metascience as a design practice. And we're confident that deeper and more imaginative ideas are possible than anything we gesture at here. Developing such ideas is part of the challenge and opportunity of metascience.

There's no reason to expect scientists to be good at this sort of design. Scientists are users of the discovery system, not (for the most part) designers. There's no reason they should deeply understand how to improve it, any more than someone who drives a car should understand how to design and build a great car. A good driver will notice problems with their car, and may have important insights about cars. But that doesn't mean they'll understand the origins of those problems in the design, or how to fix them, or how to design new and better cars. Just because someone is good at science doesn't mean they have the skills of a good designer. Worse, they're sometimes convinced they know, and will ignore or hold in low regard people who actually have more insight about these things. It's all "soft skills", not "real knowledge of science", in this view, and so how could an outsider have anything useful to say? Contrariwise, just because someone has strong convictions about the social processes of science doesn't mean they actually have much insight. Indeed, we confess to much self-doubt on this point. On this issue, humanity is still figuring out how to tell the difference between people with insight and people who merely have strong convictions about how science should be54.

We've been developing the idea of metascience as an imaginative design practice. In Part 2 we'll argue that this is one of three major components of metascience. The other two components are: (1) metascience as an entrepreneurial discipline, actually trialling and then scaling out new social processes; and (2) metascience as a research field, aimed at deepening our understanding of the social processes of science, in part as a tool to evaluate their impact on discovery. All three components must work together for metascience to be successful.

The point about scaling out deserves amplification. Our discussions so far have been of modest pilot trials that could be done unilaterally today. If such ideas were scaled out broadly, they would change science far more profoundly than the trials: they would change the culture of science, i.e., the ambient environment and background working assumptions scientists take for granted.

As a concrete example, suppose programs to support much higher risk work were scaled out broadly. Done well, the culture would change so that it was routine for scientists to pursue extremely ambitious and risky projects. Taken far enough, some scientists may begin to worry about their plans not being risky enough, rather than too risky55. This change in culture would have many follow-on effects: in how scientists choose what to work on; in how they develop and change over the course of their working lives; in the personality characteristics of the people who choose to go into and remain in science; and so on. We believe it's not an exaggeration to say that such a change would transform the culture of science. And it would be a qualitatively different kind of change than merely a trial program.

That's just one example. Many others could be given. But we trust the broader points are clear: (1) large cultural shifts are different in kind from unilateral pilot trials, even if the cultural shift is "merely" making widespread some idea of the trial; and (2) each of the trial ideas above has natural extensions as part of broader cultural changes.

Summarizing, and looking ahead, in the vision that will emerge, metascience is not just about the study of science, understanding descriptively what is happening. It has as a fundamental goal interventions to change science. And metascience is not only about incremental interventions. It is also about wildly imaginative design, conjuring new fundamental elements for social processes in science. This is what we mean when we say metascience is an imaginative design practice. Furthermore, metascience is not just theoretical. That is, it is not just about new understanding (and papers), in the conventional academic mode. It requires building new organizations, new programs, new tools, and new systems. Only by such entrepreneurial building is it possible to test metascientific ideas, and to improve our understanding. That improvement is both valuable in its own right, and also improves what people can build in the future. At the same time, it is not sufficient just to build local systems. Metascience also requires moving from (comparatively) small trials to broader cultural changes in science. That is the main subject of Part 2 of the essay.

Imagine you're a graduate student with a brilliant idea to improve the way science is funded. Chafing under what you perceive as a flawed academic system, you learn about the history of science and alternate funding models. You talk with many scientists, and delve into alternate models of the allocation of resources – ranging over fields such as finance, organizational psychology, and anthropology. You develop and discard many ideas; over time, your ideas get more imaginative and insightful. And you gradually develop insights you believe would enable you to create a new funding agency, one that would, given time and resources, be vastly superior to the status quo. You raise pilot funding, and begin to operate. You identify some misconceptions in your ideas, and improve them still further. Suppose you did all this, and your new agency genuinely was vastly better for science than existing funders such as the NSF and NIH. Would your funder rapidly grow to be larger and more influential than the NSF and NIH? This has not happened in the modern era. Nor has an outsider rapidly grown a research organization to a scale outstripping Harvard, Cambridge, and other incumbents. Janelia and Altos might superficially seem to be examples, but they are not: they didn't grow because they were better; rather, they were simply endowed in advance by wealthy donors. Indeed, the possibility of such growth seems almost ludicrous. Garage band research organizations don't grow to worldwide pre-eminence[56]. But we'll argue that this kind of change is both highly desirable and potentially feasible in science.

One tremendous strength of modern science is that a similar phenomenon does happen routinely with scientific ideas: outsiders or people with little power (e.g., graduate students) replacing established ideas with better ones. There are many famous examples. Think of the way the young and unheralded Francis Crick, James Watson, and Rosalind Franklin triumphed over Linus Pauling in the race to decode the structure of DNA. Pauling was the most famous chemist then alive, and he announced his (incorrect!) structure for DNA first; Crick, Watson, and Franklin were scrappy outsiders, apparently beaten to the punch. But they were right, and Pauling was wrong, and their structure[57] was almost immediately accepted by the scientific community, including by Pauling (!). Or think of the 22-year-old Brian Josephson, whose work on superconductivity was publicly rebutted in a paper by John Bardeen, the only person ever to win two Nobel Prizes in physics. But Josephson was right and Bardeen was wrong, and the physics community quickly sided with Josephson. Or perhaps the most famous example of all: the 26-year-old Albert Einstein, a patent clerk who proposed new conceptions of space, time, mass, and energy. And in almost no time his ideas triumphed over the old.

These examples are exalted. But something similar happens routinely at a much less exalted level. One of the best things a graduate student can do in their PhD is convincingly show that a celebrated idea of one of their elders is incomplete (often a gentle way of saying "wrong") or needs extension. That's how careers are made, and how the ideas of science are updated and improved. While this process is often bumpy, this replacement or improvement of scientific ideas is genuinely routine. It's foundational for science, and happens so often that it's tempting to take it for granted. But it only happens because extraordinary institutions ensure that good ideas from relative outsiders can get a fair hearing, even when they contradict established wisdom[58]. The value of such decentralized change in ideas has been understood since at least the time of Francis Bacon, who argued for the primacy of experiment and against the received authority of Church and State. And it was baked into the Royal Society's motto, nullius in verba, take no-one's word for it, chosen in 1660, and still used today. Of course, our scientific institutions don't always achieve this ideal of supporting decentralized change in ideas! There are many cases – think of Lynn Margulis or Gregor Mendel or Alfred Wegener – where the establishment resisted new ideas long after they should have been taken seriously. But nonetheless our scientific institutions do remarkably well, protecting and testing novel ideas, and amplifying genuine improvements, even when they come from outsiders.

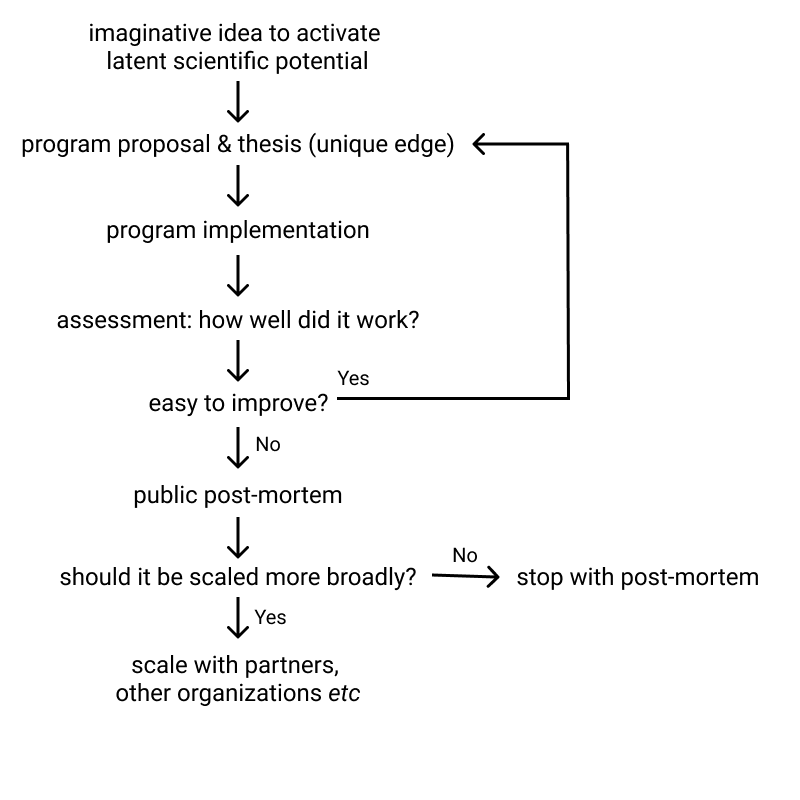

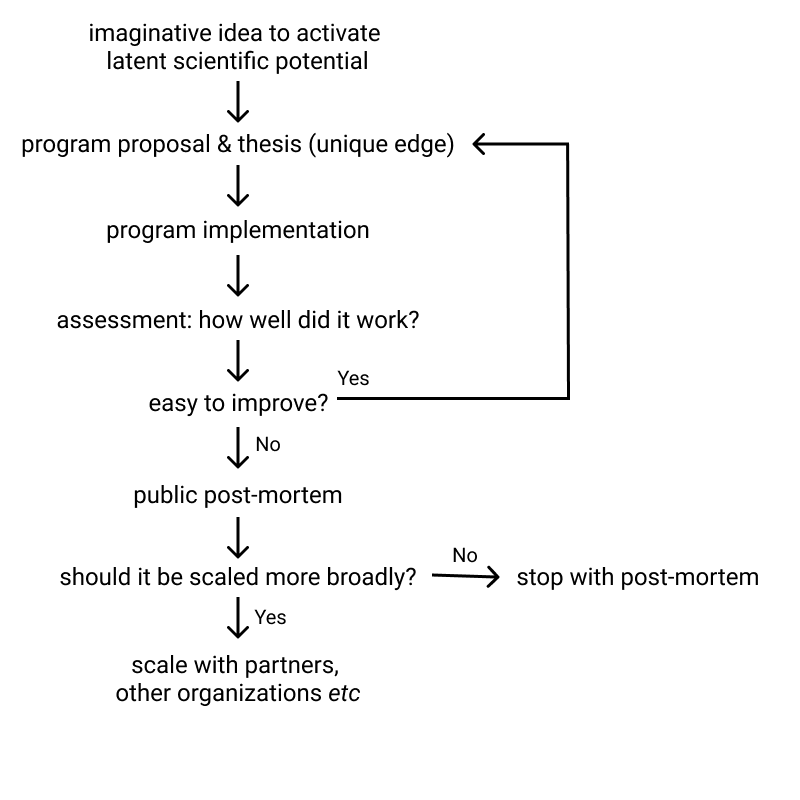

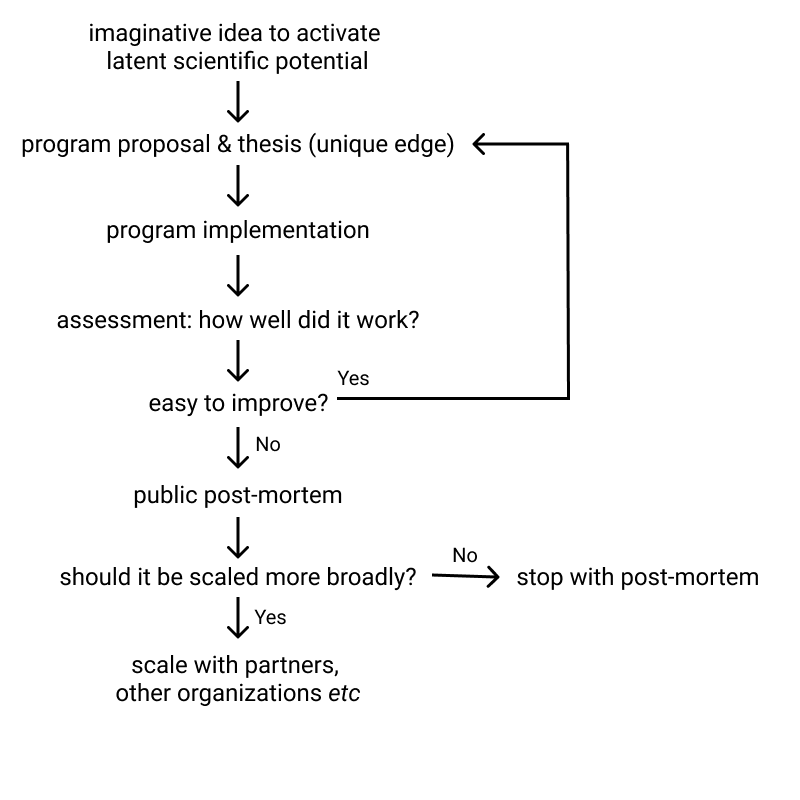

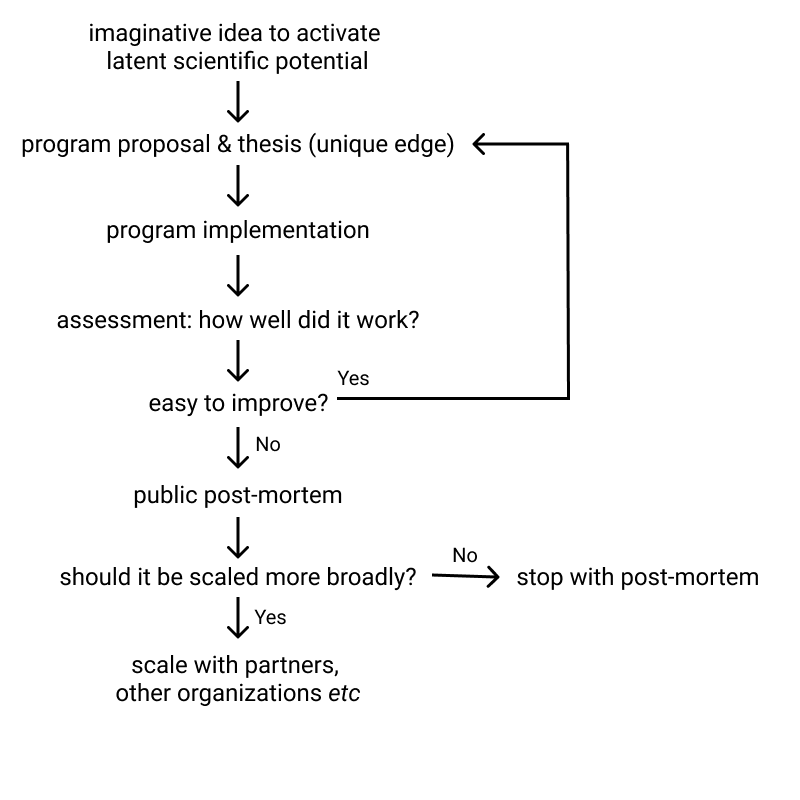

What about the analogous process for updating the social processes of science? We began this section with the concrete example of a graduate student attempting to displace today's funders or universities. But the idea can also be interpreted more broadly, to include widespread changes to social processes such as peer review, funding, and hiring, including the alternatives discussed in Part 1. In an ideal world there would be a means by which many ideas for new social processes could be easily trialled, and then taken quickly through the following metascience learning loop[59]:

Many of the steps in this diagram could be accomplished today by daring and imaginative funders. But some of the steps are very difficult to accomplish today. In particular, suppose you trial some new social process, and find it greatly superior to existing processes. How would you scale it? In this section, we argue that many forces inhibit such scaling, and that they are strong enough that many existing social processes in science are in a state of near stasis, almost unable to change. More broadly, in Part 2 we'll discuss whether and how it is possible to move out of this stasis, so the social processes of science can be much more rapidly improved.

When we criticize (say) funders for being in near stasis, we're often immediately told: "that's not true, funders try new processes all the time! Just look at all the interest in funding lotteries!" Funding lotteries are the idea that instead of deciding grant outcomes through peer review, funders should award grants at random from among the pool of applicants (usually after filtering out obviously kooky ideas). The hope is that this will increase the diversity of project ideas submitted. The idea seems to have first been seriously suggested by Daniel Greenberg in 1998[60]. It has been developed over the quarter century since, and began to receive small-scale trials in the latter part of the 2010s. Now, in the early 2020s, funding lotteries are a fashionable topic of investigation. And it's plausible that over the next decade or so they will be widely deployed, although we doubt they'll become the dominant mode of funding.

Funding lotteries are genuinely interesting, and we're pleased to see serious trials. But we're unimpressed by them as a rebuttal to the charge of stasis. They're rather the exception that proves the rule. It's hardly dynamism when it takes a quarter century to get widespread interest in, and serious trials of, one idea! There should have been at least a hundred equally or more ambitious ideas trialled over the same period. A flood, not a trickle. Most of those ideas would have failed, or been qualified successes. And, ideally, a few would have succeeded so decisively that they'd now be deployed at scale. Funding today ought to look vastly different from how it looked a quarter century ago. Not just different in the sense of making the administering bureaucracies happier – more process, more red tape, more requirements for "accountability". No: vastly better scientifically, enabling an explosion of discovery. When The New York Times ran a laudatory 2020 article about encouraging results from small-scale trials of funding lotteries, it briefly noted the response of the NSF and NIH: "The U.S. National Science Foundation and the National Institutes of Health say that they have not tested lotteries and don't currently plan to do so." Improving their approach isn't a top institutional priority or a matter of urgency for those agencies. It's something to do only if it doesn't interfere with other priorities.

We believe there are four main reasons scalable change to social processes is hard in science. First is the centralization of control over science in a small number of large funders and influential research institutions[61]. If you ask scientists to tell you how granting, hiring, and peer review are broken, many will tell you about a plethora of problems, and make suggestions for improvement. Unfortunately, many of the suggestions are of the form: "The NIH [or NSF or Nature or Harvard or one of a small handful of other organizations] should [do such-and-such]". This may work if the Director of the NIH or the NSF throws their weight behind the proposal. But that happens rarely. When most resources are under the control of a few organizations which are not designed to undergo radical organizational change, those organizations are a bottleneck preventing improvement.

Second, in many cases there is no single organization or person who can make a change. Again, you hear: "The system needs to change the incentives [or norms or processes] in [such-and-such a way]". Even when this is true, no-one is singly responsible for processes such as peer review, or the importance of high-impact journals, and so on. You can't get a meeting with the Director of Science-as-a-Whole and convince them to throw their weight behind a change. These are, instead, collective action problems. This does not mean single individuals can't have a big impact: if the Director of the NIH went on the warpath against the journal impact factor, for instance, they could make a big difference. But it's still a community-held norm, and changing it requires collective action. Of course, you may complain privately that the incentives are not right, and "something should be done"[62]. But while that may blow off steam, sensible working scientists mostly just get on with their scientific work.

The third factor, which reinforces the first two, is network effects that homogenize social processes. Over and over we hear variations on: "I'd like to try this new thing – publishing in an unconventional way, supporting students in high risk or unfashionable work, changing to an unfashionable field – but I have a responsibility to my students and collaborators to toe the line right now." There's a tyranny of the community: people won't try unusual things, since their community would look askance; the unusual things therefore never get serious attention; as a result, the community thinks poorly of those very possibilities. Such communities have mechanisms designed to help them collectively change their scientific ideas, but no similar mechanism for changing their social processes. This is compounded by a shadow of the future: people worrying about imagined future judgments of their community. Suppose, for example, someone wishes to experiment with sharing their scientific results in a non-standard way: they must weigh that desire against the (imagined) future negative judgment of some hiring or granting committee. This may seem a small thing, but community judgment is so important in science that it strongly inhibits experimentation.

These three factors badly bottleneck change within existing institutions. The obvious workaround is to build new institutions which by fiat may ignore the first two factors. Such new institutions could, for example, simply declare that publication in high-impact journals is forbidden to employees, or engage in practices strongly encouraging high-risk work. But when this is done, the third factor – network effects – influences the startup institution even more strongly. Scientists considering working for Jazzy Not-for-Profit (or for-Profit) Startup Institute must ponder: do they really want to give up publication in high-impact journals? Or to work on risky projects that may not pan out? Or to do anything else which violates the norms of their scientific community? If they ever decide to leave their Jazzy Startup Institute job, won't they then have a tough time finding another good job? After all, other potential employers haven't changed their standards just because Jazzy Startup Institute has. The shadow of the future looms large for such ventures, causing a kind of regression to the institutional mean. One of us (MN) has worked in many unusual startup research organizations. The perennial question within such organizations is: if I hew to local aspirations, will that damage my chances of getting a job anywhere else?

Now, such forces could be overcome if Jazzy Startup Institute seemed likely to grow to be far larger than others, so its standards became dominant and replaced those of the existing community. But there's a fourth bottleneck, which is that there's no natural feedback loop driving growth in new institutions. In particular, even if a new institution is scientifically outstanding, that does not mean it will grow to be much larger than existing institutions. Between these four bottlenecking factors, the discovery ecosystem can only change many of its social processes very slowly.

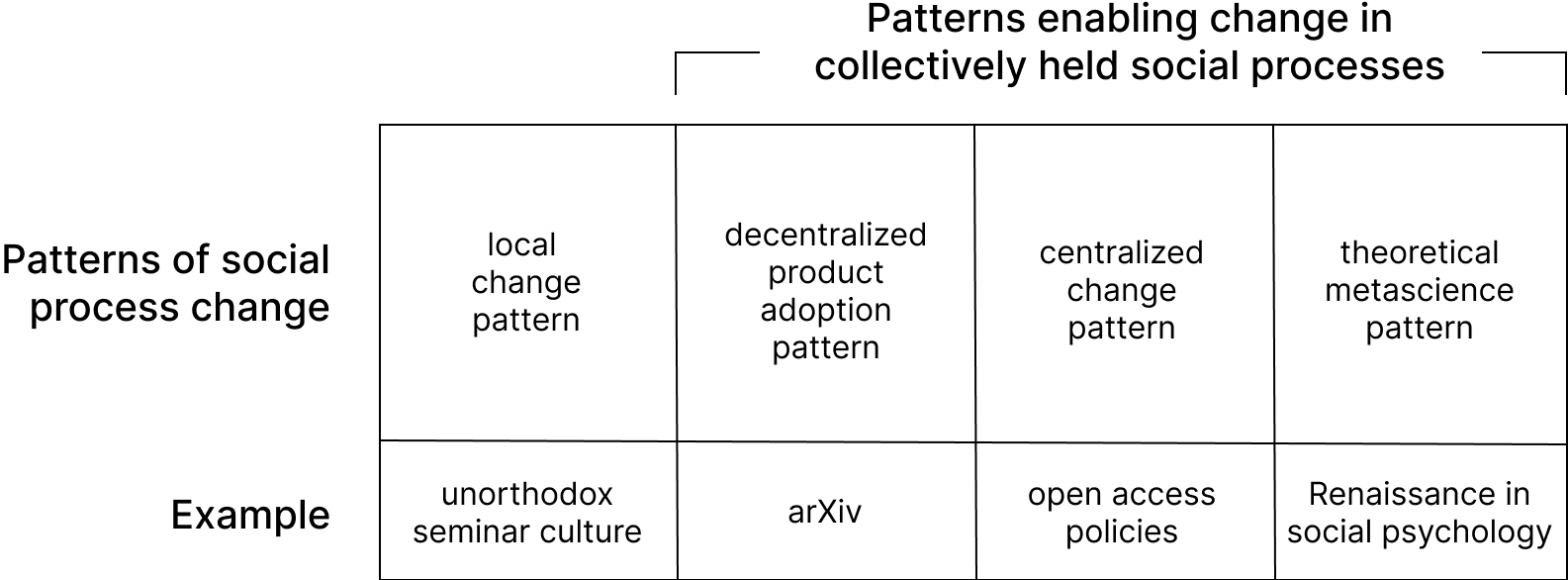

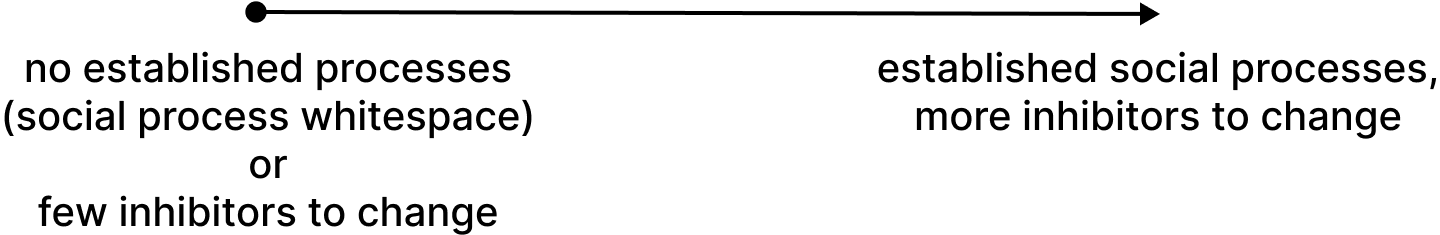

With that said, these bottlenecks only apply to change in certain kinds of social process: those which are collectively held. It is often possible to change social processes when they're not controlled by central agencies or network consensus, and not strongly influenced by community judgment or the shadow of the future. And when we look empirically, we see lots of variation in labs and institutions in just these ways. We've seen labs with very unusual approaches to mentorship, for example, or to hosting visitors, or to seminar culture[63], and so on. Such variations in social process fall outside the bottlenecking forces mentioned above, and so can be changed unilaterally. Such changes are of great interest, both from the practical point of view of doing better science, and as objects of study within metascience. But they're not within the scope of this essay. In this sense, the essay is about a vision of a particular aspect of metascience: the aspect concerned with improving social processes that are collectively held, and so subject to these bottlenecks. For the most part, we'll omit the "collectively held" from "collectively held social processes" through the rest of the essay, though it should be understood.

We've argued that stasis affects collectively held social processes in science. This stasis may be illustrated in many ways, and we'll now briefly mention a few. These examples are not meant as dispositive evidence, but merely as plausible illustrations.